JS: Functions

Theory: Signals

The sort() method is a good demonstration of how important and convenient higher-order functions are for everyday tasks. The sorting algorithm is described once, and different behaviors are obtained by passing the comparison logic right where the sorting happens. The same applies to the map(), filter() and reduce() methods discussed earlier.
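Here is a small illustration of that idea, using an arbitrary array of numbers: the algorithm inside sort() never changes, and only the comparator passed at the call site does. Since sort() mutates the array in place, the sketch sorts copies made with the spread operator.

```javascript
const numbers = [10, 1, 5, 100];

// No comparator: elements are converted to strings and compared as strings.
const asStrings = [...numbers].sort(); // [1, 10, 100, 5]

// The comparator decides the order: negative means "a goes first".
const ascending = [...numbers].sort((a, b) => a - b); // [1, 5, 10, 100]
const descending = [...numbers].sort((a, b) => b - a); // [100, 10, 5, 1]
```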

When working with higher-order functions, it is customary to split a task into subtasks and perform them one after another, linking them into a chain of operations. This resembles passing data through a pipeline of transformations.

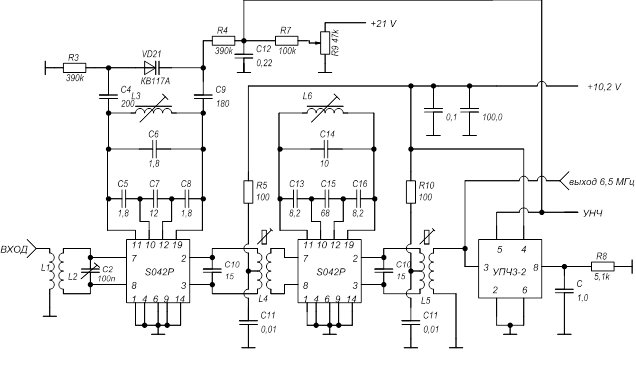

SICP (Structure and Interpretation of Computer Programs) compares this approach to the way signals are processed in electrical circuit design. The current flowing through a circuit passes through a chain of transducers: filters, noise suppressors, amplifiers, and so on. The voltage (and the current it produces) represents the data, and the transducers play the role of functions.
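To make the analogy concrete, here is a sketch in which an array of made-up signal samples (arbitrary units, with null standing for a missing reading) flows through a chain of "transducers", each one an ordinary array method. The spike threshold and the gain are arbitrary values chosen for illustration.

```javascript
// Hypothetical raw samples: null is a dropped reading, 970 is a noise spike.
const samples = [12, null, 15, 970, 14, null, 13];

const output = samples
  .filter((value) => value !== null) // filter: drop missing readings
  .filter((value) => value < 100) // noise suppressor: drop spikes above an arbitrary threshold
  .map((value) => value * 2); // amplifier: apply an arbitrary gain of 2

// output is [24, 30, 28, 26]
```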

In JavaScript, this is the main way of working with collections. Loops are rarely used for such tasks: they are much less flexible, require more code (which means more room for errors), and make it hard to split a complex algorithm into independent steps.

Suppose we are writing a function that takes a list of file system paths, finds the files with a .js extension among them (case-insensitive), and returns the names of those files. To solve this problem, we need the following functions:

- fs.existsSync(filepath): checks whether anything exists at the specified path

- fs.lstatSync(filepath).isFile(): checks whether the path points to a regular file (not a directory, symbolic link, or other file type)

- path.extname(filepath): extracts the extension from the file name

- path.basename(filepath): extracts the file name from the full path

A common solution is based on a loop. Its algorithm can be described as follows:

- Go through each path

- If the current path is a regular file with a .js extension (case-insensitive), add its name to the resulting array
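The algorithm above can be sketched with a loop roughly as follows. The function name getJsFileNames is a hypothetical choice for this example; the fs and path calls are the ones listed earlier.

```javascript
const fs = require('fs');
const path = require('path');

// Loop-based version: all checks and the transformation are mixed in one pass.
const getJsFileNames = (paths) => {
  const result = [];
  for (const filepath of paths) {
    // Keep only existing regular files whose extension is .js in any letter case.
    if (
      fs.existsSync(filepath)
      && fs.lstatSync(filepath).isFile()
      && path.extname(filepath).toLowerCase() === '.js'
    ) {
      // Store just the file name, not the full path.
      result.push(path.basename(filepath));
    }
  }
  return result;
};
```

For example, given paths to a.js, B.JS, c.txt, a directory named sub.js and a nonexistent missing.js, the function returns ['a.js', 'B.JS'].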

If you try to do the same with the reduce() method, you get code that is essentially identical to the loop solution. But on closer inspection, you can see that this task breaks down into two: filtering and mapping.
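Both variants might look something like this. The function names are hypothetical. The reduce() version is structurally the loop in disguise (the accumulator plays the role of the result array), while the second version splits the work into three filters followed by one map.

```javascript
const fs = require('fs');
const path = require('path');

// reduce() version: same shape as the loop, with the accumulator as `result`.
const getJsFileNamesWithReduce = (paths) => paths.reduce((acc, filepath) => {
  if (
    fs.existsSync(filepath)
    && fs.lstatSync(filepath).isFile()
    && path.extname(filepath).toLowerCase() === '.js'
  ) {
    acc.push(path.basename(filepath));
  }
  return acc;
}, []);

// Filter + map version: each step is an independent operation on the whole array.
const getJsFileNames = (paths) => paths
  .filter((filepath) => fs.existsSync(filepath)) // keep paths that exist
  .filter((filepath) => fs.lstatSync(filepath).isFile()) // keep regular files only
  .filter((filepath) => path.extname(filepath).toLowerCase() === '.js') // .js in any case
  .map((filepath) => path.basename(filepath)); // full paths -> file names
```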

The code is slightly shorter (not counting comments) and more expressive, but size is not the main point. As the number and complexity of operations grow, code broken down this way is much easier to read and analyze, because each operation is applied independently to the whole collection at once. You have to keep fewer details in mind, and you can immediately see how an operation affects all of the data. However, learning to break a task into subtasks is not as easy as it may seem: it takes practice and skill before your code becomes easy to digest.

Note that the filtering here is split into three steps rather than done in one. Since function definitions in JavaScript are so brief, it is much better to split the checks into several filters than to write one complex filter.
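The same contrast on plain data, with a made-up list of users: one filter with a compound condition versus a separate filter per condition. Both produce the same result; in the second form each check can be read, commented, or removed on its own.

```javascript
// Hypothetical sample data.
const users = [
  { name: 'Ann', age: 34, active: true, email: 'ann@example.com' },
  { name: 'Bob', age: 16, active: true, email: 'bob@example.com' },
  { name: 'Eve', age: 29, active: false, email: 'eve@example.com' },
  { name: 'Tom', age: 41, active: true, email: 'tom@mail.test' },
];

// One complex filter: every condition packed into a single predicate.
const viaOneFilter = users
  .filter((user) => user.active && user.age >= 18 && user.email.endsWith('@example.com'))
  .map((user) => user.name);

// The same checks as several small filters, one condition per step.
const viaSeveralFilters = users
  .filter((user) => user.active)
  .filter((user) => user.age >= 18)
  .filter((user) => user.email.endsWith('@example.com'))
  .map((user) => user.name);

// Both are ['Ann']
```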

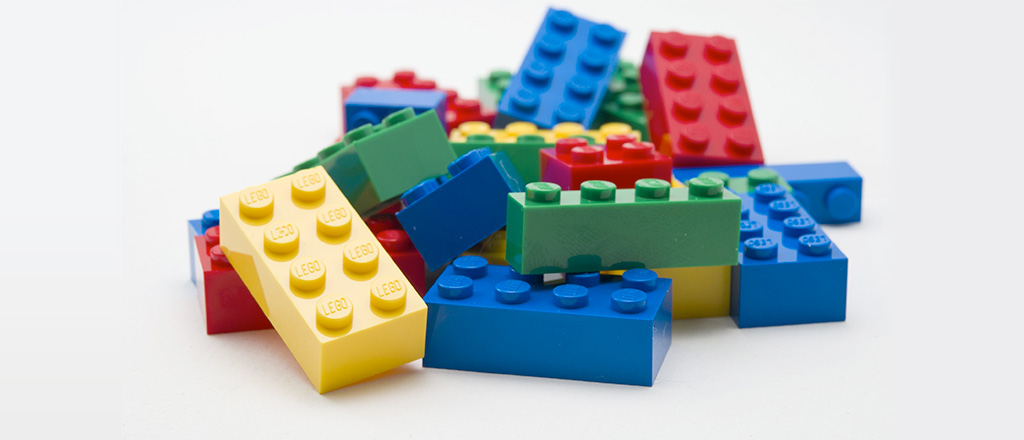

The very possibility of such a breakdown rests on a simple idea sometimes called "standard interfaces": functions use the same kind of data for both input and output, in this case an array. This lets you connect functions and build chains that perform a wide range of tasks without implementing new functions. The operations discussed earlier (mapping, filtering, and aggregation) combine to solve the vast majority of collection-processing tasks. We have all encountered something similar when assembling Lego sets: a small number of primitive parts with identical connectors lets you build structures of almost unlimited complexity.
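A minimal sketch of the "standard interface" idea: every step below takes an array and returns an array, so the steps can be assembled in different orders and combinations. The step names and the pipe helper are hypothetical, written only for this illustration.

```javascript
// Each step has the same shape: array in, array out.
const onlyEven = (items) => items.filter((n) => n % 2 === 0);
const squared = (items) => items.map((n) => n * n);
const belowFifty = (items) => items.filter((n) => n < 50);

// Hypothetical helper: feeds the output of each step into the next one.
const pipe = (...steps) => (items) => steps.reduce((acc, step) => step(acc), items);

const numbers = [1, 2, 3, 4, 5, 6, 7, 8];

// The same parts assembled into different pipelines, like Lego bricks.
const a = pipe(onlyEven, squared)(numbers); // [4, 16, 36, 64]
const b = pipe(onlyEven, squared, belowFifty)(numbers); // [4, 16, 36]
const c = pipe(squared, belowFifty, onlyEven)(numbers); // [4, 16, 36]
```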

In practice, such chains often end with aggregation, because aggregation reduces the collection to a single final value.
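For instance, with some made-up order data, filter() and map() keep producing arrays, and reduce() closes the chain by collapsing the array into one number.

```javascript
// Hypothetical order data.
const orders = [
  { item: 'book', price: 12, paid: true },
  { item: 'pen', price: 2, paid: false },
  { item: 'lamp', price: 30, paid: true },
  { item: 'mug', price: 8, paid: true },
];

const paidTotal = orders
  .filter((order) => order.paid) // array of paid orders
  .map((order) => order.price) // array of prices
  .reduce((sum, price) => sum + price, 0); // aggregation: a single number, 50
```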

Performance

So far, performance has been left out of the picture. Some of you may have noticed that every call to a function that processes a collection traverses the entire list, so the more such functions there are, the more traversals happen. It would seem that this slows the code down, so why do it? In practice, extra traversals are rarely a problem (see "The Mature Optimization Handbook"). Tasks that require processing tens or hundreds of thousands of elements at once are rare; most operations work with lists of up to a few thousand items, and for such lists one traversal more or less makes no practical difference.
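One way to see the extra traversals is to count callback invocations. In this sketch with arbitrary numbers, the chain makes one pass for filter() over all 6 elements and a second pass for map() over the 3 survivors (9 calls in total), while a single loop does the same job in 6 iterations. At this scale the difference is negligible.

```javascript
const numbers = [1, 2, 3, 4, 5, 6];

// Chain: count how many times the callbacks run.
let chainCalls = 0;
const chainResult = numbers
  .filter((n) => { chainCalls += 1; return n % 2 === 0; }) // pass 1: all 6 elements
  .map((n) => { chainCalls += 1; return n * 10; }); // pass 2: the 3 remaining elements

// Single loop doing the same work in one pass.
let loopIterations = 0;
const loopResult = [];
for (const n of numbers) {
  loopIterations += 1;
  if (n % 2 === 0) {
    loopResult.push(n * 10);
  }
}

// chainResult and loopResult are both [20, 40, 60]; chainCalls is 9, loopIterations is 6
```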

But that is not the whole story. There are special collections that do not perform operations immediately when filter(), map(), and so on are called. Instead, they accumulate the required actions and, when the result is first used, perform everything at once in a single traversal. These are the so-called "lazy collections."
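The idea can be sketched with a hypothetical, deliberately minimal LazyCollection class (not a real library): filter() and map() only record the step, and toArray() runs all recorded steps in one loop, pushing each element through the whole chain before moving to the next one.

```javascript
// Hypothetical minimal lazy collection, for illustration only.
class LazyCollection {
  constructor(items, operations = []) {
    this.items = items;
    this.operations = operations;
  }

  // Record a filtering step; nothing is computed here.
  filter(predicate) {
    return new LazyCollection(this.items, [...this.operations, { type: 'filter', fn: predicate }]);
  }

  // Record a mapping step; nothing is computed here.
  map(transform) {
    return new LazyCollection(this.items, [...this.operations, { type: 'map', fn: transform }]);
  }

  // Run every recorded step in a single traversal of the items.
  toArray() {
    const result = [];
    for (const item of this.items) {
      let value = item;
      let keep = true;
      for (const { type, fn } of this.operations) {
        if (type === 'filter' && !fn(value)) {
          keep = false; // element rejected, skip the remaining steps
          break;
        }
        if (type === 'map') {
          value = fn(value);
        }
      }
      if (keep) {
        result.push(value);
      }
    }
    return result;
  }
}

let filterCalls = 0;
const collection = new LazyCollection([1, 2, 3, 4, 5, 6])
  .filter((n) => { filterCalls += 1; return n % 2 === 0; })
  .map((n) => n * 10);

const callsBeforeUse = filterCalls; // 0: the steps were only recorded
const values = collection.toArray(); // [20, 40, 60], computed in one pass
```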